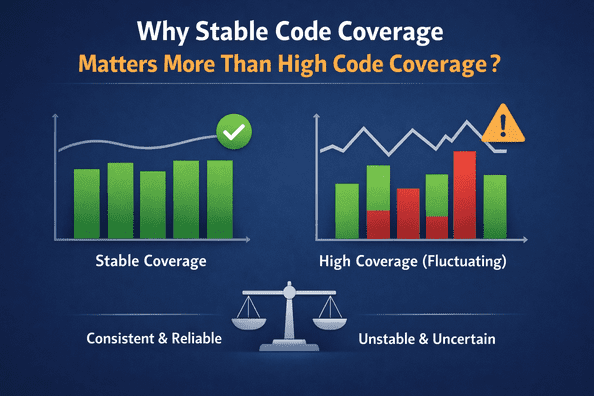

For years, code coverage percentages have been treated as a key indicator of test quality. Teams celebrate reaching 80%, 90%, or even higher coverage, often using these numbers as release gates or quality benchmarks. While code coverage is undeniably useful, an overemphasis on achieving high percentages can be misleading. In practice, stable code coverage often delivers far more value than chasing ever-increasing numbers.

Stability in code coverage reflects consistency, reliability, and meaningful test behavior over time. It helps teams understand whether their testing strategy is improving software quality or simply inflating metrics.

Understanding What Code Coverage Really Represents

Code coverage measures how much of the application code is executed during testing. It provides visibility into which parts of the codebase are exercised and which are untouched. However, coverage does not assess whether tests are meaningful, well-designed, or capable of detecting real defects.

A high code coverage number can exist alongside weak assertions, shallow tests, or excessive mocking. In such cases, coverage creates a false sense of security. Stable code coverage, on the other hand, tends to reflect a mature test suite that evolves alongside the codebase.
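To make the gap between coverage and assertion quality concrete, here is a minimal sketch. The function `apply_discount` and both tests are hypothetical and written only for illustration. Both tests execute the same lines, so a line-coverage tool would report them identically, yet only one of them would fail if the formula were wrong.

```python
def apply_discount(price: float, percent: float) -> float:
    """Hypothetical function under test: reduce price by a percentage."""
    if percent < 0 or percent > 100:
        raise ValueError("percent must be between 0 and 100")
    return price * (1 - percent / 100)


def test_shallow():
    # Runs the happy path, so those lines count as covered,
    # but nothing is asserted: a broken formula would still pass.
    apply_discount(200.0, 25.0)


def test_meaningful():
    # Executes the same lines and also pins down the expected result.
    assert apply_discount(200.0, 25.0) == 150.0
```

From the coverage report alone, the two tests are indistinguishable. The difference only shows up when a defect is introduced.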

The Problem With Chasing High Code Coverage

Pursuing higher code coverage percentages often leads teams to prioritize quantity over quality. Tests may be written simply to execute lines of code rather than validate important behavior. This approach results in tests that pass easily but fail to catch regressions.

Another issue is volatility. When teams chase high code coverage, small refactors or structural changes can cause sudden drops in metrics, even when behavior remains correct. This leads to unnecessary test rewrites and frustration, diverting effort away from real quality improvements.

High coverage targets can also discourage refactoring. Developers may avoid improving code because updating tightly coupled tests becomes too costly, increasing long-term technical and test debt.

What Stable Code Coverage Looks Like

Stable code coverage means that coverage levels remain relatively consistent across releases, even as the system evolves. Minor changes do not cause dramatic fluctuations, and coverage trends align with real changes in application behavior.
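One way to put a number on "relatively consistent" is to look at the spread and the largest release-to-release swing in a coverage history. The sketch below is only an illustration: the function name `coverage_volatility` and both sample histories are invented, and the right measure for a given team may differ.

```python
from statistics import pstdev


def coverage_volatility(history: list[float]) -> dict[str, float]:
    """Summarize how much coverage moves across consecutive releases."""
    deltas = [later - earlier for earlier, later in zip(history, history[1:])]
    return {
        # Overall spread of the coverage percentages.
        "stdev": pstdev(history),
        # Largest single jump or drop between two adjacent releases.
        "max_swing": max((abs(d) for d in deltas), default=0.0),
    }


# Hypothetical coverage percentages for five consecutive releases.
stable = [78.0, 78.5, 79.0, 79.5, 80.0]
volatile = [70.0, 92.0, 75.0, 90.0, 72.0]

print(coverage_volatility(stable)["max_swing"])    # 0.5
print(coverage_volatility(volatile)["max_swing"])  # 22.0
```

The second series peaks at a higher number than the first ever reaches, but its swings suggest the metric is reacting to something other than steady testing work.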

Stability indicates that tests are resilient and focused on outcomes rather than implementation details. When coverage grows steadily over time instead of spiking artificially, it suggests that new functionality is being tested appropriately without over-testing trivial code paths.

Stable code coverage also makes metrics more trustworthy. Teams can use coverage trends to identify risk areas, rather than reacting to noise caused by superficial changes.

Why Stability Reflects Test Quality

Test suites with stable code coverage tend to validate meaningful behavior. These tests focus on business rules, critical workflows, and integration points. Because they are less dependent on internal structure, they survive refactors and architectural changes.
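The contrast between structure-dependent and outcome-focused tests can be sketched as follows. The `Cart` class and both tests are hypothetical. The first test is coupled to a private helper through `unittest.mock.patch.object`, so renaming or inlining that helper would break it even though behavior is unchanged. The second test checks only the public result.

```python
from unittest.mock import patch


class Cart:
    """Hypothetical class under test."""

    def __init__(self) -> None:
        self._items: list[tuple[float, int]] = []

    def add(self, price: float, qty: int = 1) -> None:
        self._items.append((price, qty))

    def _subtotal(self) -> float:
        # Internal helper: an implementation detail that may change.
        return sum(price * qty for price, qty in self._items)

    def total(self, tax_rate: float = 0.0) -> float:
        return round(self._subtotal() * (1 + tax_rate), 2)


def test_coupled_to_internals():
    cart = Cart()
    cart.add(10.0, 2)
    # Patching the private helper ties the test to the current structure.
    with patch.object(Cart, "_subtotal", return_value=20.0) as helper:
        cart.total()
        helper.assert_called_once()


def test_outcome():
    cart = Cart()
    cart.add(10.0, 2)
    cart.add(5.0)
    # Only the observable result is checked, so refactors of the
    # internals leave this test untouched.
    assert cart.total(tax_rate=0.1) == 27.5
```

Both tests cover `total`, but only the second would survive a refactor that removes `_subtotal`.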

This resilience is essential in long-lived projects. As systems grow and evolve, stable code coverage allows teams to move confidently, knowing that core functionality is protected without constant test maintenance.

In contrast, unstable coverage often signals brittle tests. Frequent spikes and drops indicate that tests are too tightly coupled to code structure or that coverage is being manipulated rather than earned.

Code Coverage as a Trend, Not a Target

One of the most effective ways to use code coverage is to observe trends rather than enforce rigid thresholds. Gradual improvement over time is far more meaningful than hitting an arbitrary number.

When coverage drops unexpectedly, it may indicate newly introduced untested logic or risky refactoring. When it increases steadily, it often reflects improved test practices and better coverage of real-world scenarios.
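A trend-based check can be sketched as a comparison against a rolling baseline rather than a fixed threshold. The function `flag_drops`, its `window` and `tolerance` parameters, and the sample history are all assumptions made for this example, not a prescribed method.

```python
def flag_drops(history: list[float], window: int = 3, tolerance: float = 1.0) -> list[int]:
    """Return indices of releases whose coverage falls more than
    `tolerance` points below the mean of the preceding `window` releases."""
    flagged = []
    for i in range(window, len(history)):
        baseline = sum(history[i - window:i]) / window
        if baseline - history[i] > tolerance:
            flagged.append(i)
    return flagged


# Hypothetical coverage percentages; the fifth release dips sharply.
history = [80.0, 80.5, 81.0, 81.2, 76.0, 81.5]
print(flag_drops(history))  # [4]
```

A flagged release is a prompt to look at what changed, not an automatic failure. The drop may turn out to be new untested logic, or simply a large block of code being moved.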

By treating code coverage as a diagnostic signal instead of a goal, teams gain actionable insights without creating unhealthy pressure.

Supporting Refactoring and Long-Term Maintainability

Stable code coverage plays a critical role during refactoring efforts. When coverage remains consistent, teams can confidently restructure code without fear of breaking hidden behavior. Tests that focus on outcomes rather than internals provide a safety net that encourages improvement instead of stagnation.

This is especially important in large codebases where frequent refactoring is necessary to control complexity. Stable coverage helps ensure that refactoring enhances quality rather than introducing subtle regressions.

Using Stable Code Coverage to Guide Testing Efforts

Rather than asking how high code coverage should be, a better question is where coverage matters most. Stable metrics help teams identify areas with consistently low coverage, which may represent risky or neglected parts of the system. This is especially useful when combined with insights from tools like Keploy that surface real execution paths from production traffic.

They also reveal dead code or obsolete features that no longer require testing. Over time, this clarity enables smarter test investment and better prioritization.
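Finding areas with consistently low coverage can be sketched as a simple filter over per-module history. The module names, the numbers, and the 50% cutoff below are invented for illustration, and how the per-module figures are collected is left open.

```python
def consistently_low(per_module: dict[str, list[float]], cutoff: float = 50.0) -> list[str]:
    """Return modules whose coverage stayed below `cutoff` in every recorded release."""
    return sorted(
        name
        for name, history in per_module.items()
        if history and max(history) < cutoff
    )


# Hypothetical per-module coverage percentages across three releases.
per_module = {
    "billing": [42.0, 41.5, 43.0],   # low in every release
    "auth": [88.0, 87.5, 88.2],      # high and steady
    "reports": [30.0, 65.0, 31.0],   # volatile, not consistently low
}
print(consistently_low(per_module))  # ['billing']
```

Modules on this list are candidates for a closer look: they may be risky and under-tested, or they may be dead code that no longer needs tests at all.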

Conclusion

High code coverage numbers may look impressive, but they do not guarantee software quality. Stable code coverage provides a more reliable signal of test effectiveness, resilience, and long-term maintainability. It reflects tests that evolve with the system, protect critical behavior, and support confident change. By focusing on stability instead of chasing percentages, teams can build test suites that genuinely improve quality rather than inflate metrics. In the long run, stable code coverage is not just easier to maintain; it is far more valuable.